Mature Acquisition Strategy

As the program progresses through the Technology Maturation and Risk Reduction (TMRR) Phase, the acquisition strategy must be refined with greater detail about how the program will be executed during the Engineering and Manufacturing Development (EMD) Phase. In parallel, the program office must finalize its strategies for systems engineering, test, and sustainment. Depending on the program’s documentation approach and Milestone Decision Authority (MDA) direction, this information can be presented as standalone documents, integrated into a single tailored document, or structured at a higher capstone level (e.g., portfolio) with appendices for each program or release.

Transition from Technology Maturity and Risk Reduction (TMRR)

As identified earlier in the TMRR Phase, programs should involve multiple vendors in risk reduction activities. Some early Agile adopters have had multiple vendors develop risk reduction releases that address a key feature or prototype planned functionality. These efforts provide valuable hands-on experience and help government personnel (acquirers and users) understand the key processes, roles, and deliverables involved in the various Agile practices the vendors employ. They also help identify technical and programmatic risks, strengthen cost and schedule estimates, provide insight into potential vendors’ expertise to develop the complete system, and give the vendors insight into the program requirements, concept of operations (CONOPS), and technical environment. The government can then leverage the insight gained during this phase to shape its acquisition strategy: how best to structure and execute the program, and how to set contract incentives and other RFP details.

The program’s tailored acquisition strategy should capture the Agile practices it plans to adopt. It should reinforce the core Agile software development tenets: iterative planning at the start of each release and sprint, and retrospectives at the end of each to identify opportunities for improvement. The strategy should empower small teams to iteratively develop releases based on prioritized backlog items while actively collaborating with key stakeholders and keeping them informed of progress and issues.

During the TMRR phase the program identifies personnel to fill key government roles and solidifies the culture needed to embrace a more dynamic approach to developing capabilities. The program manager should clearly understand the expectations of acquisition and operational executives, to include decision authorities, reporting, strategies, resources, and issue resolution processes.

While Agile software development values working software over comprehensive documentation, program stakeholders must still regularly examine and adjust their strategies and methods throughout the lifecycle. Government and industry stakeholders should hold a retrospective after each release to discuss what went well and what areas need improvement going forward. The program should update its documentation to reflect its disciplined approach to managing requirements, changes, technical baselines, user engagements, and contractor incentives, among others.

Structure the Program to Enable Agile Effects

Structuring an IT program for Agile development differs significantly from structuring a program around a traditional development methodology. Traditional acquisition programs usually have discrete acquisition phases driven by milestone events to deliver a large capability. Agile is more dynamic and requires a program structure that supports multiple, small capability releases. Given the radical differences between Agile and a traditional development model, programs often view this activity as too complicated and therefore fail to consider the Agile development process as an option.

Creating an appropriate structure for an Agile program is a fundamental first step in developing a strategy for program-level adoption of Agile. This activity requires the program to make significant adjustments to the traditional DoDI 5000.02 program structures and acquisition processes so that they support Agile development timelines and objectives. The DoDI 5000.02 acquisition policy places heavy emphasis on tailoring acquisition models to meet program needs. Frank Kendall, then Under Secretary of Defense for Acquisition, Technology, and Logistics (AT&L), outlined in the 2012 Defense AT&L article “Optimal Program Structure”:

“…each program be structured in a way that optimizes that program’s chances of success. There is no one solution. What I’m looking for fundamentally is the evidence that the program’s leaders have thought carefully about all of the [technology, risk, integration, other] factors.”

Despite this guidance, programs often do not know how to effectively tailor programs to receive approval from process owners. It takes years of experience to truly understand the nuances involved in tailoring an acquisition program.

A core theme throughout DoDI 5000.02 is tailoring program structures and acquisition processes to meet the needs of the individual program. The policy includes several acquisition models to consider, such as Model 2 for defense-unique software, Model 3 for incrementally fielded software, and Hybrid Model B for software-dominant programs. The tailored model described here provides additional detail and supporting guidance for each activity within each phase. Best practices for Agile acquisition include structuring the program to:

- Emphasize an outcome-based approach

- Consider the entire acquisition lifecycle for the acquisition strategy

- Ensure that contracts support frequent delivery of capability, visibility into contractor performance, continuous testing, and accountability for results

Programs must be designed in such a way that they meet all the DoDI 5000.02 statutory and regulatory requirements, and are also executable and marketable to senior acquisition executives who may be unfamiliar with the details of the Agile process. The following sections describe a recommended approach to structuring an Agile DoD program, starting with the process to structure an Agile release and building on this concept to develop a fully tailored DoD Agile acquisition program.

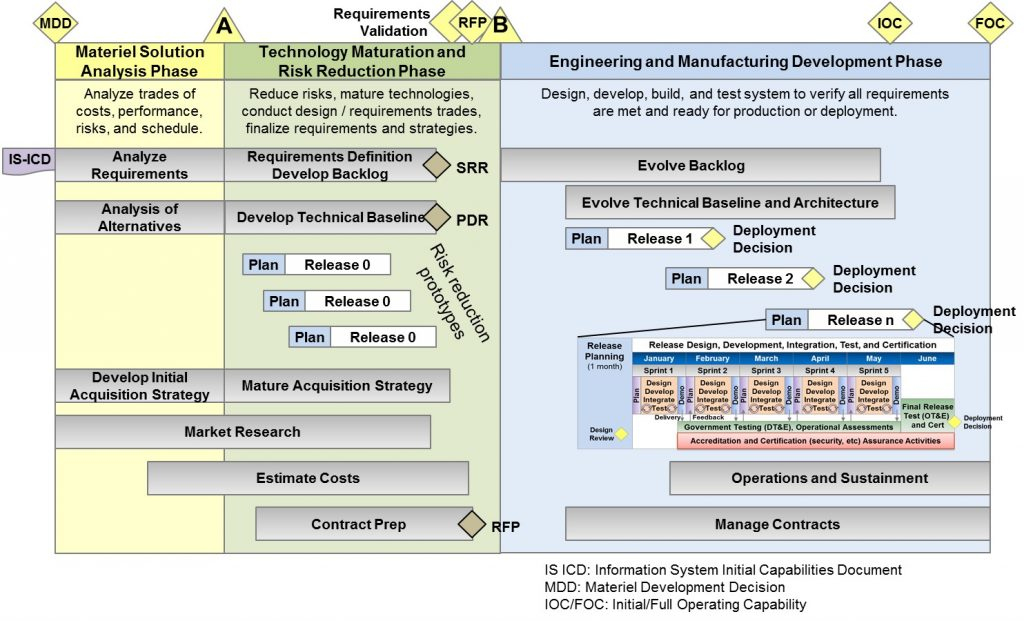

Agile Releases

When developing the structure for an Agile program, the PMO should first decide how to structure its releases. The release represents the core element of the program structure, guiding how frequently the program delivers capabilities to the end users. The length of each release depends upon operational, acquisition, and technical factors that should be discussed with stakeholders across the user and acquisition organizations. As a general guideline, most releases should take less than 18 months, with a goal of six months (as championed by US CIO, GAO, and FITARA).

Shorter release cycles have several benefits, the most important being that the program deploys useful capability to end users faster. Shorter release cycles also minimize the amount of change that can become bottled up or compounded in the requirements pipeline, and they provide earlier and more frequent feedback opportunities and shorter timelines to incorporate corrective actions. Fielding smaller releases of capability also involves the test community early, which allows the acquisition community to better understand the test community’s sensitivities and helps reduce potential conflict and contention downstream. However, program offices should balance release timelines with the operational community’s ability to absorb frequent releases and newly deployed capabilities. This often requires working with functional leadership to redesign key acquisition processes and associated stakeholder involvement around 6–12 month releases rather than 5–10 year increments. Once the contractor or contractors are selected, the release timelines can be finalized.

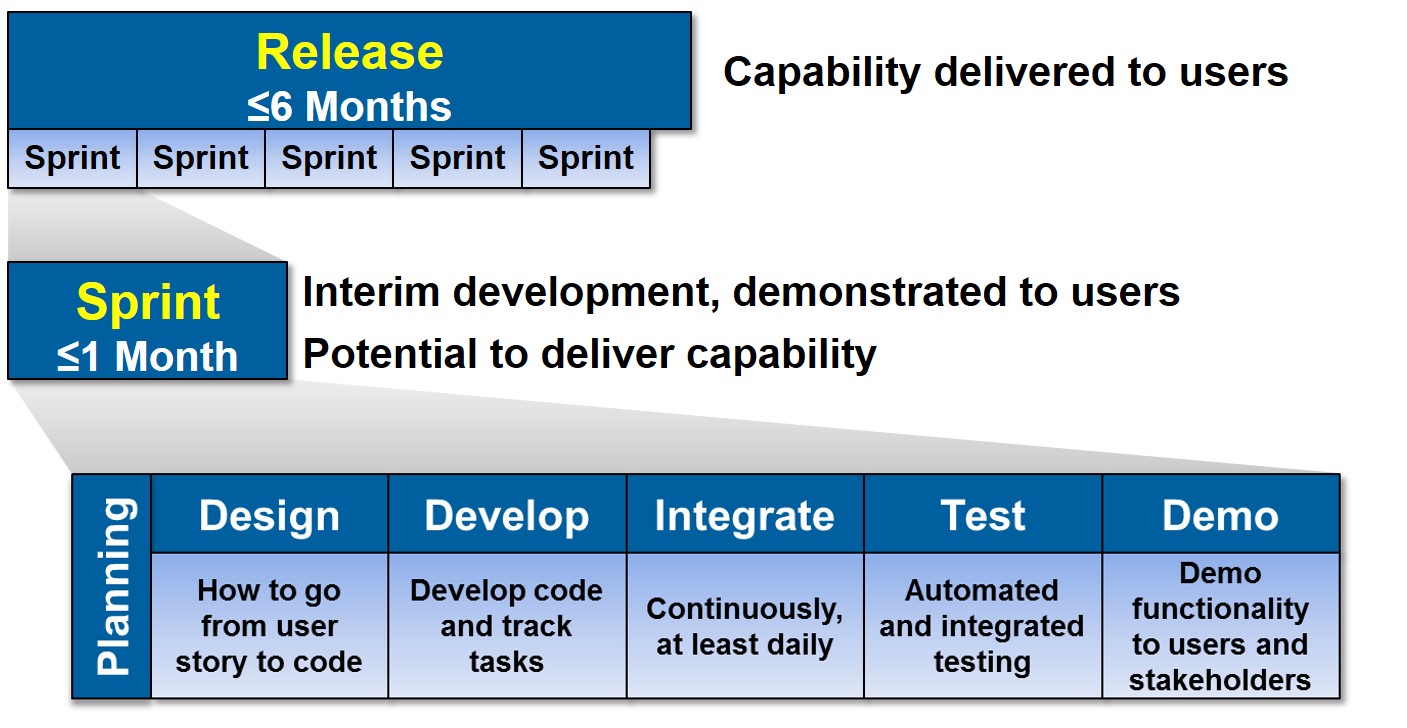

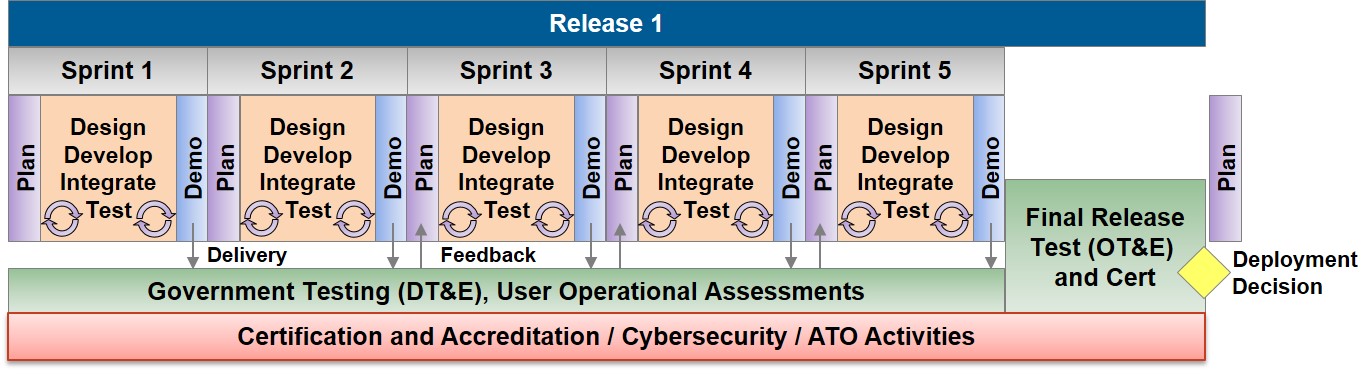

Each release comprises multiple sprints and a final segment for release testing and certification. Each sprint, in turn, includes planning, design, development, integration, and test, culminating in demonstration of capabilities to users and other stakeholders. The development team conducts these activities for multiple units of work (user stories) within a sprint. Testing by the developer and government is heavily automated. The development team demonstrates capabilities to users and testers after each sprint. Developers may be required to deliver interim code to the government at the end of each sprint or after multiple sprints. The government or an integration contractor can integrate the interim code into its software environment for further testing and operational assessments. The figure below shows a potential 6-month release structure with five monthly sprints.
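To make the arithmetic behind a notional six-month release concrete, the sketch below lays out back-to-back sprints followed by a release test and certification segment and checks the result against the general guideline that releases should take less than 18 months. It is a minimal illustration under stated assumptions: the four-week sprint length, the six-week test segment, the 30.44-day average month, and all function names are hypothetical choices, not values prescribed by the guidance.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import date, timedelta

# Hypothetical planning values mirroring the notional structure:
# five monthly (four-week) sprints plus a release test and certification segment.
SPRINT_WEEKS = 4
TEST_WEEKS = 6
MAX_RELEASE_MONTHS = 18  # general guideline: releases should take less than 18 months
DAYS_PER_MONTH = 30.44   # average month length, used only for the guideline check


@dataclass
class Segment:
    name: str
    start: date
    end: date  # exclusive: the next segment starts on this date


def plan_release(start: date, sprints: int = 5,
                 sprint_weeks: int = SPRINT_WEEKS,
                 test_weeks: int = TEST_WEEKS) -> list[Segment]:
    """Lay out back-to-back sprints followed by a release test segment."""
    segments = []
    cursor = start
    for number in range(1, sprints + 1):
        end = cursor + timedelta(weeks=sprint_weeks)
        segments.append(Segment(f"Sprint {number}", cursor, end))
        cursor = end
    segments.append(Segment("Release test and certification", cursor,
                            cursor + timedelta(weeks=test_weeks)))
    return segments


def release_length_months(segments: list[Segment]) -> float:
    """Approximate release length in months, from first sprint start to final end."""
    days = (segments[-1].end - segments[0].start).days
    return days / DAYS_PER_MONTH


def within_guideline(segments: list[Segment]) -> bool:
    """True when the release is shorter than the 18-month guideline."""
    return release_length_months(segments) < MAX_RELEASE_MONTHS


if __name__ == "__main__":
    for seg in plan_release(date(2024, 1, 1)):
        print(f"{seg.name:32} {seg.start} -> {seg.end}")
```

With these assumed values, five four-week sprints plus six weeks of release testing total 26 weeks, which is roughly six months; stretching the same pattern to 20 sprints would exceed the 18-month guideline.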

Notional Six Month Release Structure

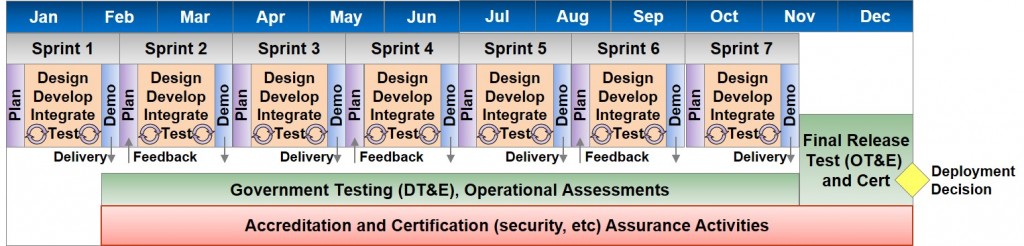

Notional 12-Month Release Structure

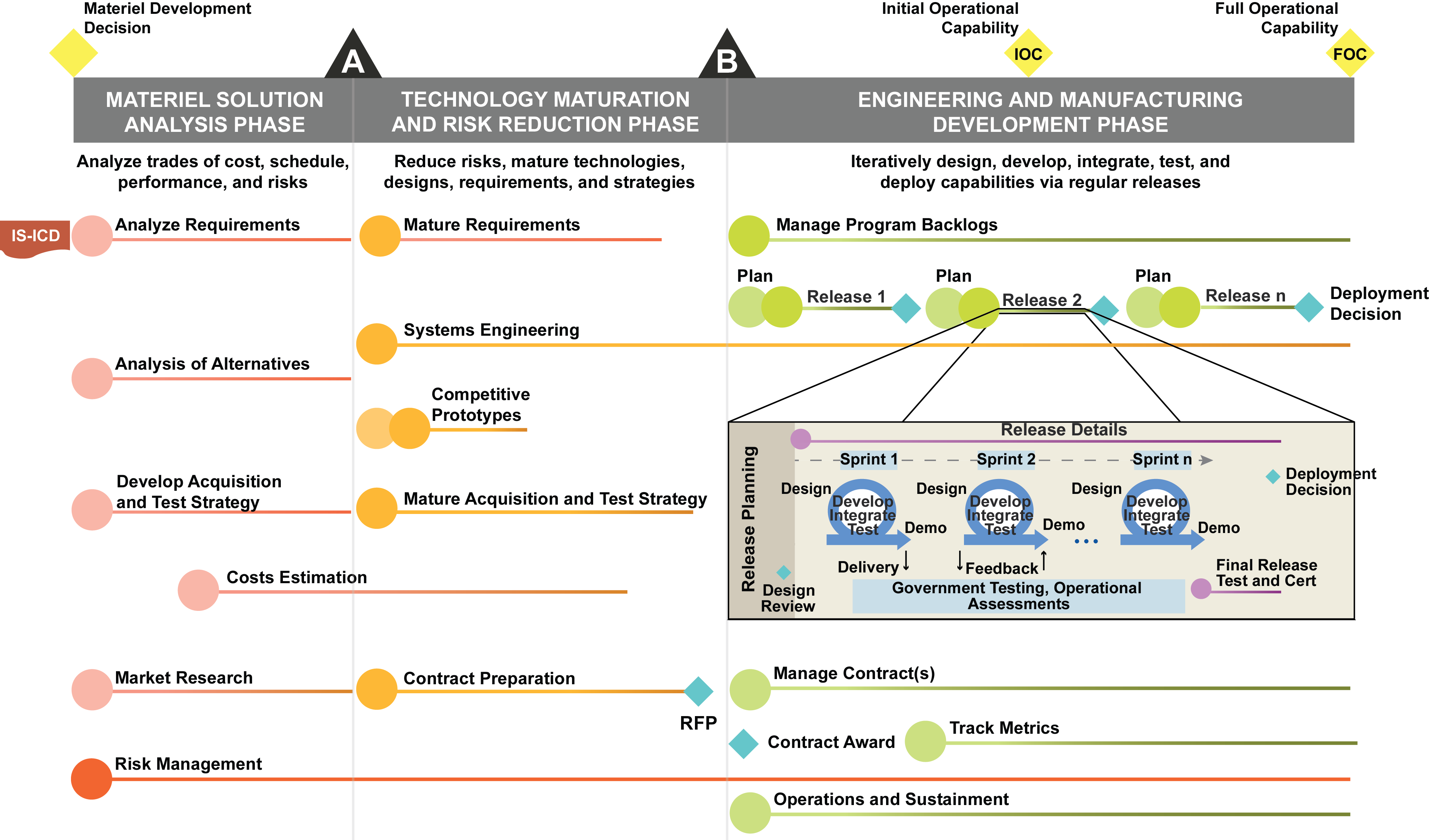

Potential Agile Program Structure

After determining a release strategy, each effort should tailor its programmatic and acquisition processes to effectively enable Agile development practices. The figure below illustrates one potential structure at a top level. In this approach, requirements, technology, and architecture development are continual processes rather than sequential steps in early acquisition phases. Each release involves a series of sprints to iteratively develop and test a capability, ultimately leading to capability deliveries to the user every 6 months upon approval. Instead of bounding development via a series of increments with Milestones B and C at each end, development thus becomes a continual process. Semi-annual reviews with senior leadership and other key stakeholders ensure transparency into the program’s progress, plans, and issues. Programs provide additional insight to executives via monthly or quarterly reports and other status meetings.

Test Planning

The traditional testing life cycle and the Agile testing cycle involve the same activities or phases, although there are significant differences in how those phases are planned, executed, and delivered. Iterative development cycles repeatedly through a set of steps or activities such as initiate, plan, design, develop, test, and release. One of the most fundamental differences in testing on Agile projects is the extensive use of automation and testing at the software unit and component levels.

Agile Testing Matrix, Scaled Agile Framework

For an Agile team, product quality is built in rather than tested in, and it is the responsibility of the entire team, supported by practices such as Test Driven Development (TDD) and Acceptance Test Driven Development (ATDD). Understanding the differences and expectations of testing on Agile projects is essential to achieving the desired project and product quality.

Planning

The government/contractor Agile team should conduct detailed test planning for releases and sprints. This plan gives an overall picture of system-level and cross-functional testing, ensuring that the test environment, tools, and other resources needed outside the scope of each team’s sprint planning are covered. A normal sprint plan that covers only one or two sprints ahead is not enough for a large-scale enterprise system built with Agile techniques. When a system test environment may include prototype hardware (which can take a long time to deliver) or other equipment, the plan must account for installing and configuring that hardware.

Sprint/release test planning must identify:

- What should be tested and why? (Depending upon the goals of the sprint/release, some areas will be tested and others excluded.)

- Where will the item be tested? Which test environment will be used for which types of testing?

- Who is in charge of testing different parts of the product?

- When can the different test activities be performed, and when can the different parts of the product be tested?

- How will the product be tested? What test approach is needed, and which methods, techniques, tools, and test data are necessary?

- What dependencies on and relationships to other components, third-party products, technology, etc., does the item have?

- Which areas carry the most and the fewest risks? Does the strategy reflect the product risks?

- How much time is available, and how much is needed, to execute the strategy?

- What is the “definition of done” for the sprint/release?
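One way a team might keep the planning questions above from going unanswered is to record each planned test item as structured data and check it for gaps before the sprint starts. The sketch below is a hypothetical illustration, not a prescribed format: the class names, field names, risk labels, and the hour-based capacity check are all assumptions chosen to mirror the what/why/where/who/when/how, dependency, risk, time, and definition-of-done questions.

```python
from __future__ import annotations

from dataclasses import dataclass, field

RISK_ORDER = {"high": 0, "medium": 1, "low": 2}  # hypothetical risk labels


@dataclass
class TestPlanItem:
    what: str          # what should be tested
    why: str           # rationale tied to the sprint/release goal
    where: str         # test environment
    who: str           # responsible party
    when: str          # timing within the sprint/release
    how: list[str]     # approach, methods, tools, test data
    dependencies: list[str] = field(default_factory=list)
    risk: str = "medium"
    hours_needed: float = 0.0


@dataclass
class SprintTestPlan:
    name: str
    hours_available: float
    definition_of_done: list[str]
    items: list[TestPlanItem] = field(default_factory=list)
    excluded: list[str] = field(default_factory=list)  # deliberately out of scope

    def gaps(self) -> list[str]:
        """Return unanswered planning questions (an empty list means none found)."""
        problems = []
        if not self.definition_of_done:
            problems.append("no definition of done")
        for item in self.items:
            for question in ("why", "where", "who", "when"):
                if not getattr(item, question):
                    problems.append(f"{item.what}: '{question}' not answered")
            if not item.how:
                problems.append(f"{item.what}: 'how' not answered")
        needed = sum(item.hours_needed for item in self.items)
        if needed > self.hours_available:
            problems.append(f"needs {needed}h but only {self.hours_available}h available")
        return problems

    def by_risk(self) -> list[TestPlanItem]:
        """Highest-risk items first, so the strategy reflects product risk."""
        return sorted(self.items, key=lambda item: RISK_ORDER[item.risk])
```

Keeping the plan as data rather than prose lets the gap check run automatically at sprint planning; a team could just as well keep the same questions in a checklist template.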

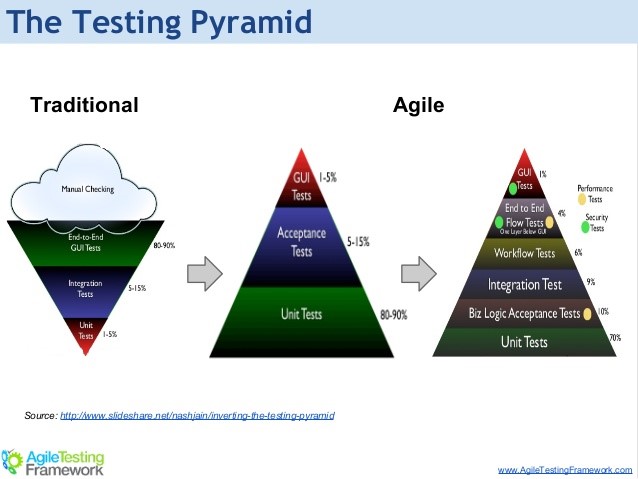

Since automation is a key facet of Agile testing, planning for automation is critical. Agile techniques such as TDD and continuous integration depend on a strong plan for automated testing. Extensive automation at the unit/component level of development, as well as automated tests associated with builds and integration, supports the rapid delivery of high-quality code for Agile projects. As illustrated in the figure below, the Agile test pyramid, developed by Mike Cohn in his book Succeeding with Agile, demonstrates the role of automation in Agile testing and reflects the extensive use of unit/component level testing expected for Agile projects.

Agile Testing Framework (Source: Mike Cohn)

Planning for Large-Scale Enterprise Agile Projects

The government must take additional considerations into account when planning large-scale enterprise projects. Typically, large projects call for more test coordination and integration activities, and even for additional roles such as test coordinators, depending on the scope of the project. To meet the enterprise testing requirements of large-scale and federal government systems, the Agile model must be modified to support large-scale integration at the system level, specialized security testing, and system-level performance testing. Additionally, standards and regulatory compliance testing, scrum-of-scrums coordination, and hardening and stabilization sprints must be defined. See the section on scaling testing for larger scale projects for additional information on specific testing considerations.

Test Environment Planning

Environment planning for enterprise Agile projects deals with many of the same concerns as traditional environment planning: the need to build the environment for all types of anticipated testing, and the need for test data that must be created, brought from production, or simulated. If environments have multiple users, coordination is also a consideration. Ultimately, Agile project test environments must support automated unit and component testing and, potentially, test driven development and continuous integration. Ideally, the test environment configuration matches production as closely as possible. If DevOps infrastructure management automation is used, the same infrastructure-as-code scripts should also be used to manage the test environments.
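The idea of building test and production from the same infrastructure-as-code baseline can be illustrated with a small drift check. This is a sketch under assumptions: the baseline keys and values, the `render_environment` and `config_drift` helpers, and the rule that only server count may differ are all hypothetical; a real program would apply the same idea to whatever configuration its infrastructure tooling produces.

```python
# Hypothetical baseline shared by every environment (values are illustrative only).
BASELINE = {
    "os_image": "rhel-8",
    "db_engine": "postgresql-15",
    "app_server_count": 4,
    "tls_enabled": True,
}

# Keys assumed to differ legitimately, e.g. test runs on smaller hardware.
ALLOWED_DIFFERENCES = frozenset({"app_server_count"})


def render_environment(overrides: dict) -> dict:
    """Build an environment config from the same baseline for test and production."""
    config = dict(BASELINE)
    config.update(overrides)
    return config


def config_drift(test_cfg: dict, prod_cfg: dict,
                 allowed: frozenset = ALLOWED_DIFFERENCES) -> list[str]:
    """List settings where test diverges from production beyond the allowed keys."""
    keys = set(test_cfg) | set(prod_cfg)
    return sorted(k for k in keys
                  if k not in allowed and test_cfg.get(k) != prod_cfg.get(k))
```

For example, a test environment rendered with only a smaller server count reports no drift, while one pinned to an older database engine is flagged before its test results are trusted.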

Automation Planning

Automation is critical to Agile testing. Iterative development means that the contractor builds capabilities a little at a time, so each new piece of functionality can break something that already worked, which in turn requires a check to confirm that the existing capabilities still work. Automated tests therefore become central to continuously developing and releasing code. A multi-tiered automation program (unit, component, middle tier, front end) is essential to Agile projects. Under Test Driven Development, a common Agile practice, the test criteria are defined up front and developers write automated tests that encode those criteria before they write any code.
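The test-first cycle can be shown with Python's standard `unittest` module. In this minimal sketch, the tests encoding a hypothetical acceptance criterion ("backlog items come back in priority order, and ties keep their original order") are written first and fail; only then is just enough code written to make them pass. The `prioritize` function, the sample backlog items, and the convention that priority 1 is highest are illustrative assumptions.

```python
import unittest


# Step 1 (red): the team writes tests that encode the acceptance criteria first.
class TestPrioritizeBacklog(unittest.TestCase):
    def test_highest_priority_first(self):
        # Hypothetical convention: priority 1 is the most important.
        items = [("export report", 2), ("login", 1), ("audit log", 3)]
        names = [name for name, _ in prioritize(items)]
        self.assertEqual(names, ["login", "export report", "audit log"])

    def test_ties_keep_original_order(self):
        items = [("a", 1), ("b", 1)]
        self.assertEqual([name for name, _ in prioritize(items)], ["a", "b"])

    def test_empty_backlog(self):
        self.assertEqual(prioritize([]), [])


# Step 2 (green): write only enough code to make the tests pass.
def prioritize(items):
    """Return (name, priority) pairs ordered by priority, lowest number first."""
    # sorted() is stable, so items with equal priority keep their original order.
    return sorted(items, key=lambda item: item[1])
```

Saved as, for example, `test_backlog.py`, the suite runs with `python -m unittest test_backlog`; the same tests then stay in the regression suite and run on every check-in.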

An automation framework that can support a multi-tiered approach to testing is critical. This framework, starting with unit level automated tests and moving forward through the other development activities, should promote test reuse and the use of a common platform and tools to ensure an effective test strategy.

Test driven development and continuous integration are both used heavily in Agile projects and promote extensive use of automated test scripts at the unit and component level. Per the Agile test pyramid, most automated tests should be written at the unit/component level, preferably using test driven development. These tests, written in the same language as the source code, become the basis for the regression tests executed with each check-in and build under continuous integration or deployment. The tests in the second layer of the pyramid evaluate business logic without requiring a Graphical User Interface (GUI). Because GUI tests require more maintenance, they should be kept to a minimum and used mainly to validate the user interface, not to test business logic.
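A team could check whether its automated suite actually has the pyramid's shape by tagging each test with its layer and comparing counts. The sketch below assumes a hypothetical inventory of `(test name, layer)` pairs and three layer names (`unit`, `service`, `ui`); the strict "each layer smaller than the one below" rule is an illustrative rule of thumb, not a threshold defined by the pyramid itself.

```python
from __future__ import annotations

from collections import Counter

# Layers from the base of the pyramid to the top (hypothetical labels).
LAYERS = ("unit", "service", "ui")


def layer_counts(tests: list[tuple[str, str]]) -> dict[str, int]:
    """Count tests per pyramid layer; each test is a (name, layer) pair."""
    counts = Counter(layer for _, layer in tests)
    unknown = set(counts) - set(LAYERS)
    if unknown:
        raise ValueError(f"unknown layer(s): {sorted(unknown)}")
    return {layer: counts.get(layer, 0) for layer in LAYERS}


def is_pyramid(tests: list[tuple[str, str]]) -> bool:
    """True when each layer has fewer tests than the layer beneath it."""
    c = layer_counts(tests)
    return c["unit"] > c["service"] > c["ui"]
```

An inventory dominated by GUI tests (sometimes called an "ice cream cone") fails the check, signaling that business-logic tests should move down to the service or unit layer.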

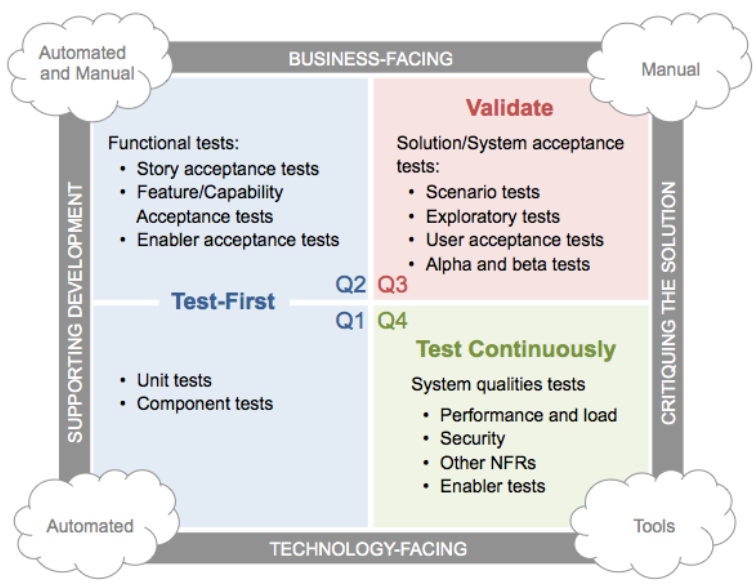

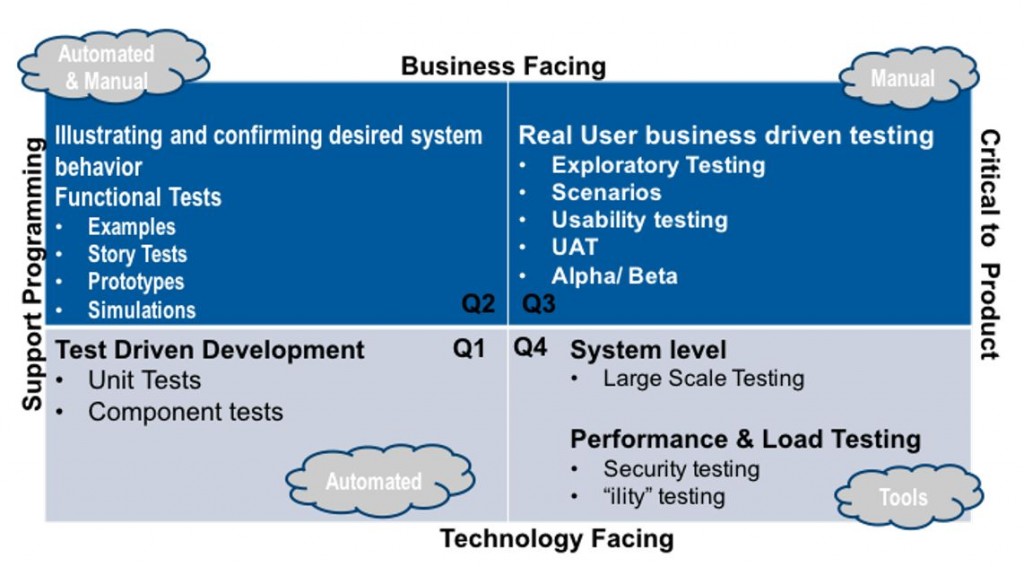

Developing a complete Agile software testing strategy requires considering all the types of testing feasible for the system as well as the specific tests that must be automated. The Agile quadrants diagram (Source: Lisa Crispin) illustrates a good approach for testing teams to use when considering their test and test automation strategy. The diagram shows the spectrum of testing that can and should be done to deliver high-quality software in an iterative environment. Calling out whether each activity is automated or manual helps teams clearly see the role that automation should play in each quadrant. The test team must know when to use the quadrants and how to implement them.

Agile Testing Quadrants

The quadrants serve as a guide during release and iteration planning, so that the whole team starts out by thinking about testing: how to plan it and what resources are needed to execute it. The quadrant numbers have no specific meaning or order; they simply designate each quadrant. When considering their testing strategy, especially in relation to automation, programs have several options:

- Many projects start with Q2 tests, because those are where you get the examples that turn into specifications and tests that drive coding, along with prototypes and the like.

- Others might start with a high-risk area such as performance modeling and testing (which is in Q4) because that was the most important criterion for the feature.

- If requirements are uncertain, Q3 with exploratory testing would be a viable option.

- Q3 and Q4 testing require that some code be written and deployable, but most teams iterate through the quadrants rapidly, working in small increments.
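The quadrants and the starting-point options above can be captured in a small lookup table. The quadrant contents below follow the diagram as commonly presented by Crispin and Gregory; the `suggest_starting_quadrant` heuristic is a hypothetical encoding of the bullets (a Q4 risk such as performance first, then uncertain requirements, otherwise Q2), not a rule from the source, and a real team would weigh these factors in planning rather than apply them mechanically.

```python
from __future__ import annotations

# Agile testing quadrants: (orientation, example test types, typical execution).
QUADRANTS = {
    "Q1": ("technology-facing, supports the team",
           ["unit tests", "component tests"], "automated"),
    "Q2": ("business-facing, supports the team",
           ["functional tests", "examples", "story tests", "prototypes", "simulations"],
           "automated and manual"),
    "Q3": ("business-facing, critiques the product",
           ["exploratory testing", "scenarios", "usability testing",
            "user acceptance testing", "alpha/beta"], "manual"),
    "Q4": ("technology-facing, critiques the product",
           ["performance and load testing", "security testing", "'-ility' testing"],
           "tools"),
}

# Hypothetical risk labels that map to Q4 concerns.
Q4_RISKS = {"performance", "load", "security"}


def suggest_starting_quadrant(requirements_uncertain: bool,
                              top_risk: str | None = None) -> str:
    """Hypothetical heuristic encoding the starting-point options listed above."""
    if top_risk in Q4_RISKS:
        return "Q4"  # start with the high-risk, technology-facing area
    if requirements_uncertain:
        return "Q3"  # exploratory testing helps clarify uncertain requirements
    return "Q2"      # examples become specifications and tests that drive coding


def needs_deployable_code(quadrant: str) -> bool:
    """Q3 and Q4 testing require some code to be written and deployable."""
    return quadrant in {"Q3", "Q4"}
```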

Design/Development – Testing techniques, approaches, considerations

The need to provide visibility and quick status updates about product quality prompts Agile projects to utilize a broader array of testing techniques and approaches than traditional waterfall programs. Research shows that testing from many different perspectives and techniques has a higher probability of revealing defects and problems. When designing and developing tests for Agile projects, the program should consider selecting an extensive set of the techniques from the list below to increase the chance of finding defects as soon as possible.

- Testing in pairs: pairs a tester with a business owner or developer; one person controls the keyboard while the other suggests inputs or scenarios, takes notes, and so on.

- Exploratory testing: involves simultaneous learning, test design, and test execution.

- Incremental tests: start out with minimal detail and build as more information becomes available.

- Checklist-driven testing: enables quick design and development of tests for standard activities.

- Scenario-based testing: bases the test design on user business scenarios.

- Automated generation of test data: uses automated means to create production-like test data without many of the issues associated with real production data.

- Frequent regression testing built into the automated build and check-in sequence: performs automated regression testing on each changed component as part of the build process.
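Automated generation of test data can be as simple as a seeded generator that produces realistic-looking records containing no real personal information. The sketch below is a hypothetical illustration: the record fields, role names, and date range are assumptions. Using a fixed seed with Python's `random.Random` makes the data identical on every run, which keeps automated regression results repeatable, and the `example.com` domain is reserved for documentation use.

```python
from __future__ import annotations

import random
import string
from datetime import date, timedelta

ROLES = ("operator", "analyst", "administrator")  # hypothetical role names
EPOCH = date(2015, 1, 1)                          # hypothetical earliest account date


def synthetic_users(n: int, seed: int = 42) -> list[dict]:
    """Generate n production-like user records that contain no real personal data."""
    rng = random.Random(seed)  # fixed seed -> identical data on every regression run
    users = []
    for i in range(n):
        username = "user_" + "".join(rng.choices(string.ascii_lowercase, k=8))
        users.append({
            "user_id": f"TEST-{i:05d}",          # obviously synthetic, unique key
            "username": username,
            "email": f"{username}@example.com",  # reserved documentation domain
            "role": rng.choice(ROLES),
            "created": EPOCH + timedelta(days=rng.randrange(3650)),
        })
    return users
```

Because the generator is deterministic for a given seed, a failing regression test can be reproduced exactly; changing the seed produces a fresh but equally safe data set for broader coverage.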

Additional Test and Evaluation References

- Agile Testing Framework

- Test and Evaluation for Agile by Dr. Steve Hutchinson

- Shift Left by Dr. Steve Hutchinson, Defense AT&L Magazine

- Shift Left! editorial by Dr. Steve Hutchinson, ITEA Journal, June 2013

- Agile Testing Strategies by Scott Ambler and Associates

- Interview with Lisa Crispin: Whole-Team Approach to Quality, A1QA

- Agile Testing: A Practical Guide for Testers and Agile Teams by Lisa Crispin and Janet Gregory

- More Agile Testing: Learning Journeys for the Whole Team by Lisa Crispin and Janet Gregory

- Top Ten Books Every Agile Tester Should Read

- Agile Testing Downloads by Bob Galen

Videos

- Agile Testing, Google TechTalks

- Agile Testing Best Practices, Excella Consulting

- Test Driven Development Overview, ScrumStudy

References:

- DoD Acquisition Strategy Template, Apr 2011

- Program Management, Defense Acquisition Guidebook

- Stakeholder Needs and Expectations Planning Your Agile Project, William Broadus III, Nov 2013

- The Need for Agile Program Documentation, LTC TJ Wright, Jun 2013

- DoD Risk, Issue, and Opportunity Management Guide, DASD/SE, Jan 2017