Engineering and Manufacturing Development (EMD) Phase

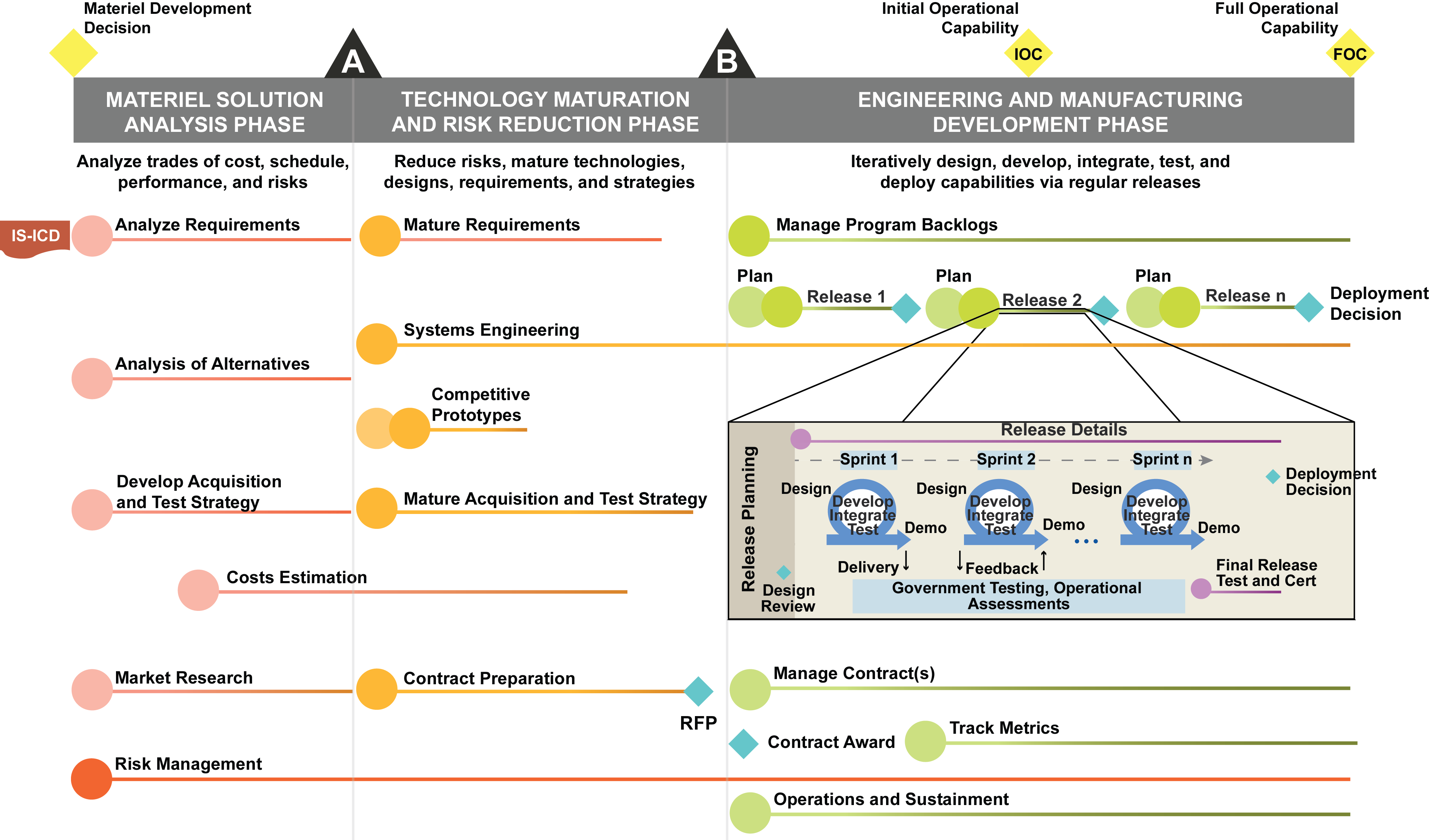

Manage Program Backlogs

Once a contractor is selected, the development team reviews and refines the program and release backlogs with the Product Owner and PMO. This team is best suited to estimate the complexity of each user story and the schedule to complete it. Over time, as the team develops releases, the fidelity of its estimates improves because members better understand the team’s velocity, refine the backlogs, and hone their estimation techniques.
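As a rough illustration of how velocity feeds schedule estimates, the sketch below averages the story points completed in recent sprints and forecasts how many sprints the remaining backlog would take. The function names, the three-sprint window, and the sample numbers are assumptions made for illustration, not a method prescribed by this guide.

```python
import math


def average_velocity(completed_points, window=3):
    """Mean story points completed per sprint over the most recent sprints."""
    recent = completed_points[-window:]
    if not recent:
        raise ValueError("need at least one completed sprint")
    return sum(recent) / len(recent)


def sprints_remaining(backlog_points, velocity):
    """Whole sprints needed to finish the backlog at the given velocity."""
    if velocity <= 0:
        raise ValueError("velocity must be positive")
    return math.ceil(backlog_points / velocity)


# Hypothetical history: story points completed in each past sprint.
history = [18, 22, 20, 24]
velocity = average_velocity(history)          # averages the last three sprints
forecast = sprints_remaining(110, velocity)   # 110 points assumed left in the backlog
print(f"velocity={velocity:.1f}, sprints remaining={forecast}")
```

A forecast like this is only as good as the underlying estimates, which is why it tends to become more reliable after the team has completed several releases.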

Introduction to Managing Program Backlogs

The Product Owner regularly grooms the program backlog with the help of many stakeholders. Periodic meetings independent of the ongoing release may be required to refine the priority user stories with the users, engineers, developers, architects, testers, and other stakeholders. The use of collaborative online tools will ensure stakeholders have insight into the backlog details and provide inputs and feedback. As with many areas of Agile, a small, empowered, high-performing team will have better success than efforts that involve many people in daily operations. While five to nine is a common team size cited in Agile forums, the best size depends on the program environment.

The Product Owner, actively collaborating with users and stakeholders, is responsible for grooming the backlog to ensure the content and priorities remain current as teams receive feedback and learn more from developments and external factors. Users and development teams may add requirements to the program or release backlog or shift requirements between them. The release and development teams advise the Product Owner on the development impacts of these decisions, while users advise the release team about the operational priorities and impacts. Dependencies must be understood, because the ability to develop a capability that addresses a specific user story may depend on externally delivered capabilities (e.g., from other systems) or on internally developed capabilities that are already built or still planned. Some programs may use a Change Control Board to aid in some of the larger backlog grooming decisions.
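One way to make such dependencies visible is to record, for each backlog item, the capabilities it depends on and to flag the items that cannot start yet. The sketch below is a minimal illustration; the `BacklogItem` class, the story identifiers, and the names of the external capabilities are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class BacklogItem:
    story_id: str
    priority: int                       # lower number = higher priority
    depends_on: set = field(default_factory=set)


def ready_items(backlog, delivered):
    """Return items whose dependencies are all delivered, highest priority first."""
    ready = [item for item in backlog if item.depends_on <= delivered]
    return sorted(ready, key=lambda item: item.priority)


def blocked_items(backlog, delivered):
    """Map each blocked story to the dependencies it is still waiting on."""
    return {
        item.story_id: item.depends_on - delivered
        for item in backlog
        if not item.depends_on <= delivered
    }


backlog = [
    BacklogItem("US-3", priority=1, depends_on={"EXT-auth-service"}),
    BacklogItem("US-1", priority=2),
    BacklogItem("US-2", priority=3, depends_on={"EXT-map-data"}),
]
delivered = {"EXT-map-data"}  # capabilities already delivered, internal or external

print([item.story_id for item in ready_items(backlog, delivered)])
print(blocked_items(backlog, delivered))
```

Here the highest-priority story is blocked by an external capability, which is the kind of situation the Product Owner would want surfaced before committing it to a release.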

In an Agile environment, users often translate requirements into epics and user stories to concisely define the desired system functions and provide the foundation for Agile estimation and planning. These stories and epics describe what the users want to accomplish with the resulting system. User stories help ensure that users, acquirers, developers, testers, and other stakeholders have a clear and agreed-upon understanding of the desired functions. They offer a far more dynamic approach to managing requirements than large requirements documents. Development teams periodically review the stories on the backlog to ensure the fidelity of details and estimates. Engineers may also write user stories to cover underlying characteristics of security, technical performance, supportability, training, or quality. Interfaces with other systems are usually captured as user stories.

User stories require little maintenance; they can be written on something as simple as an index card. A common format for a user story is:

“As a [user role], I want to [goal], so I can [reason].”

For example, “As a registered user, I want to log in so I can access subscriber-only content.” User stories should have the following characteristics:

- Concise, written descriptions of a capability valuable to a user

- High-level description of features

- Written in user language, not technical jargon

- Contain information to help teams estimate level of effort

- Worded to enable a testable result

- Traceable to overarching mission threads
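The format and characteristics above can be captured in a small record. The following sketch is illustrative only; the `UserStory` class, its field names, and the mission-thread label are assumptions, not a prescribed schema.

```python
from dataclasses import dataclass


@dataclass
class UserStory:
    role: str
    goal: str
    reason: str
    mission_thread: str        # traceability to a higher-level mission thread
    estimate_points: int = 0   # level-of-effort estimate, if known

    def text(self):
        """Render the story in the common 'As a ..., I want to ...' format."""
        return f"As a {self.role}, I want to {self.goal}, so I can {self.reason}."


story = UserStory(
    role="registered user",
    goal="log in",
    reason="access subscriber-only content",
    mission_thread="Subscriber access",  # hypothetical thread name
    estimate_points=3,
)
print(story.text())
```

Keeping the role, goal, and reason as separate fields makes it easy to render the story in plain user language while still carrying the estimate and traceability data that teams need.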

Each user story should be associated with defined acceptance criteria to confirm when the story is completed and working as intended. This requires the stakeholders to have a clear “definition of done” to ensure common expectations. Acceptance criteria consist of a set of pass-fail statements that specify the essential functional requirements in verifiable (measurable) terms. Defining acceptance testing during the planning stages enables developers to test interim capabilities frequently and rework them until they achieve the desired result. This approach also streamlines independent testing following development of a release. Examples of acceptance criteria include:

“A registered user can log in 95% of the time.”

“While logged in, subscriber-only content is available 100% of the time to registered users.”
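Because acceptance criteria are pass-fail statements in measurable terms, they translate naturally into automated checks. The sketch below evaluates the first example criterion against a list of login attempt outcomes; the function names, the sample data, and the way the 95% threshold is measured are assumptions made for illustration.

```python
def login_success_rate(attempts):
    """Fraction of login attempts that succeeded (attempts is a list of bools)."""
    if not attempts:
        raise ValueError("no login attempts recorded")
    return sum(attempts) / len(attempts)


def meets_criterion(attempts, threshold=0.95):
    """Pass-fail result for a criterion requiring a minimum login success rate."""
    return login_success_rate(attempts) >= threshold


# Hypothetical test run: 97 successful logins out of 100 attempts.
attempts = [True] * 97 + [False] * 3
print("PASS" if meets_criterion(attempts) else "FAIL")
```

A check of this kind can be rerun after every sprint, which supports the frequent interim testing described above.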

The development team, users, and other stakeholders may use storyboards and mockups to help visualize the system use and features.

Teams update the backlogs based on what the users and developers learn from demonstrated and fielded capabilities. The new items may include making fixes to software or generating new ideas. As operations change, teams may add or change requirements and user stories, both in content and priority. For example, the team may add integration items to the backlog as the program interfaces with other systems. Systems engineers and enterprise architects may add items that support the release integrity or technical underpinnings of capability delivery and information assurance. Ideally, teams should address issues discovered by government and contractor testers within the next sprint or release, but they may add those issues to a backlog based on the scope of the fix.

Additional references for user stories:

- User Stories, Agile Alliance

- User Stories: What is a User Story?, Mike Cohn

- Non-functional Requirements as User Stories, Mike Cohn

- 200 Examples of User Stories, Mountain Goat Software

- User Stories Applied, Mike Cohn

- Writing Effective User Stories, GSA Tech Guide

- User Story Examples, GSA Tech Guide

- 5 Common Mistakes We Make Writing User Stories, Scrum Alliance

- 10 Tips for Writing Good User Stories, Roman Pichler

- How to Write Great Agile User Stories, Sprintly

- The Easy Way to Writing Good User Stories, Code Squeeze

- User Stories: An Agile Introduction, Agile Modeling

- Write a Great User Story, Rally

- Your Best Agile User Story, Alexander Cowan

Active User Involvement in Development

A close partnership between users and materiel developers is critical to the success of defense acquisition programs and is a key tenet of Agile. Users must remain actively involved throughout the development process to ensure a mutual understanding across the acquisition and user communities. While most users maintain operational responsibilities associated with their day job, the more actively they can engage in the development, the better the chances of success. Operational commanders must commit to allocating time for users to engage in development activities.

Users share the vision and details of the concepts of operations (CONOPS) and the desired effects of the intended capabilities. Through ongoing discussions, the program office and developers gain a better understanding of the operational environment, identify alternatives, and explore solutions. Users can then describe and validate the requirements, user stories, and acceptance criteria. The program office must make certain that the requirements can be put on contract and are affordable given funding, schedule, and technological constraints. Testers should also take an active part in these discussions to ensure common expectations and tests of performance.

In situations where primary users are not available to engage with the Agile team on a regular, ongoing basis, the end users can designate representatives to speak on behalf of the primary users.

User Forums

User forums enhance collaboration and ensure that all stakeholders understand and agree on the priorities and objectives of the program. The forums can serve as a valuable mechanism for gathering the full community of stakeholders and fostering collaboration. They give users an opportunity to familiarize developers with their operational requirements and CONOPS and to communicate their expectations for how the system would support these needs. Continuous engagement of users, developers, acquirers, testers, trainers, and the many other stakeholders at these forums also enables responsive updates and a consistent understanding of the program definition.

Suggestions for successful user forums include:

- Hold regularly scheduled user forums and fund travel by stakeholders across the user community; alternatively, or in addition, provide for virtual participation.

- Arrange for developers to demonstrate existing capabilities, prototypes, and emerging technologies. These demonstrations give users invaluable insight into the art of the possible and the capabilities currently available. User feedback, in turn, guides developers and acquirers in shaping the program and R&D investments.

- Allow the full community to contribute to the program’s future by holding discussions on the strategic vision, program status, issues, and industry trends. Program managers should not rely on one-way presentations.

- Give stakeholders the opportunity to convey expectations and obtain informed feedback.

- Establish working groups that meet regularly to tackle user-generated actions.

- Hold training sessions and provide educational opportunities to stakeholders.

Large-scale enterprise Agile systems may require additional independent teams of testers to execute system- and enterprise-level testing and integration. Refer to Contract Preparation for details on contracting for outsourced testing.

Key Questions to Validate Requirements Management

- What requirements documents does the program have?

- What requirements oversight board exists and what does it review/approve?

- Who is responsible for managing the program backlog?

- What is the process for adding, editing, and prioritizing elements on the backlog?

- How are requirements translated into user stories, tasks, acceptance criteria, and test cases?

- Are there clear “definitions of done” that the team and stakeholders agree upon?

- Are developers involved in scoping the next sprint (or equivalent)?

- Does the backlog include technical requirements from systems engineers, developers, and testers along with the user requirements?

- How frequently are the backlogs groomed?

- Is there an established change control process for the requirements backlog?

- Are architecture or system-of-systems requirements managed on the backlog?

- Are requirements/user stories/backlogs maintained in a central repository accessible by a broad user base, and is content shared at user forums?

- Does the product owner regularly communicate with the development team about the CONOPS?

- Are testers actively involved in the requirements process to ensure requirements are testable?

- Is there a mapping among requirements, user stories, tasks, and related items?

- Is there a mapping between release/sprint requirements and higher level program/enterprise requirements?

- Does the program have a dynamic process to regularly update, refine, and reprioritize requirements?

- Does each requirement/user story planned for the release/sprint have associated cost and acceptance/performance criteria?

- Are users actively engaged throughout the design, development, and testing process to provide feedback for each sprint?

- Is integration with lifecycle support processes and tools iteratively refined and evaluated as part of each sprint?

Additional references:

- IT Box

- Eliciting, Collecting, and Developing Requirements, MITRE Systems Engineering Handbook

- Analyzing and Defining Requirements, MITRE Systems Engineering Handbook

- Agile Software Requirements, Dean Leffingwell

- User Stories Applied: For Agile Software Development, Mike Cohn

- Requirements Engineering in an Agile Software Development Environment, Alan Huckabee, Sep 2015

- Agile Requirements Best Practices, Scott Ambler

- Requirements by Collaboration, Ellen Gottesdiener (see also the book of the same name)

- Agile Requirements Modeling, Scott Ambler

- Writing Effective Use Cases, Alistair Cockburn

- To slice stories, first make sure they are too big, Gojko Adzic
